DataSimulation

The test data tool.

The new standard for your test data.

In the highly regulated financial and insurance industries, access to realistic test data is the decisive factor for quality, speed and innovation. The requirement is clear: test data must be secure, realistic and compliant.

The challenge:

Quality, safety and efficiency in harmony

Companies face the challenge of reconciling three key requirements that seem contradictory at first glance:

High compliance requirements

Complex test scenarios

Agile development cycles

The dacon® approach:

From technical constraint to creative tool

We are convinced that the solution is not to compromise, but to redefine what is possible. Our vision is to leave the technical bubble and give business users an intuitive tool: one that enables them to work independently, quickly and securely, and to contribute their expertise directly to the quality assurance process.

Our answer: dacon® DataSimulation –

The intelligent blueprint of your production data

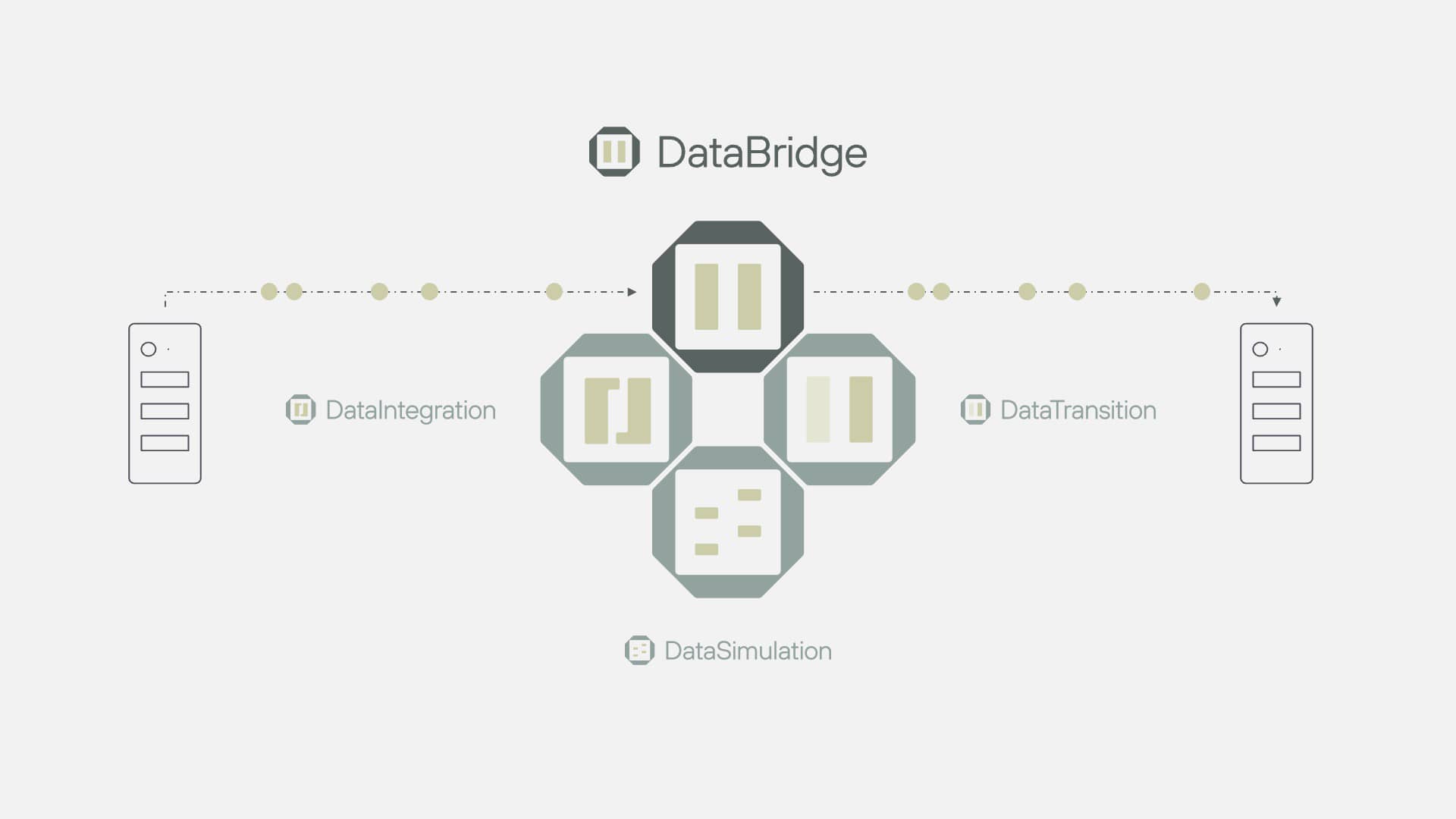

dacon® DataSimulation is not another anonymization tool. It is a specialized module of our proven dacon® DataBridge, which serves as a strategic blueprint for your data.

Copy selected business objects from source to target (e.g. from your production environment to your development environment) according to your individual requirements at the touch of a button: structurally identical and with realistic, intelligent anonymization applied. We take on the complexity of the process so that you can focus entirely on your goals.

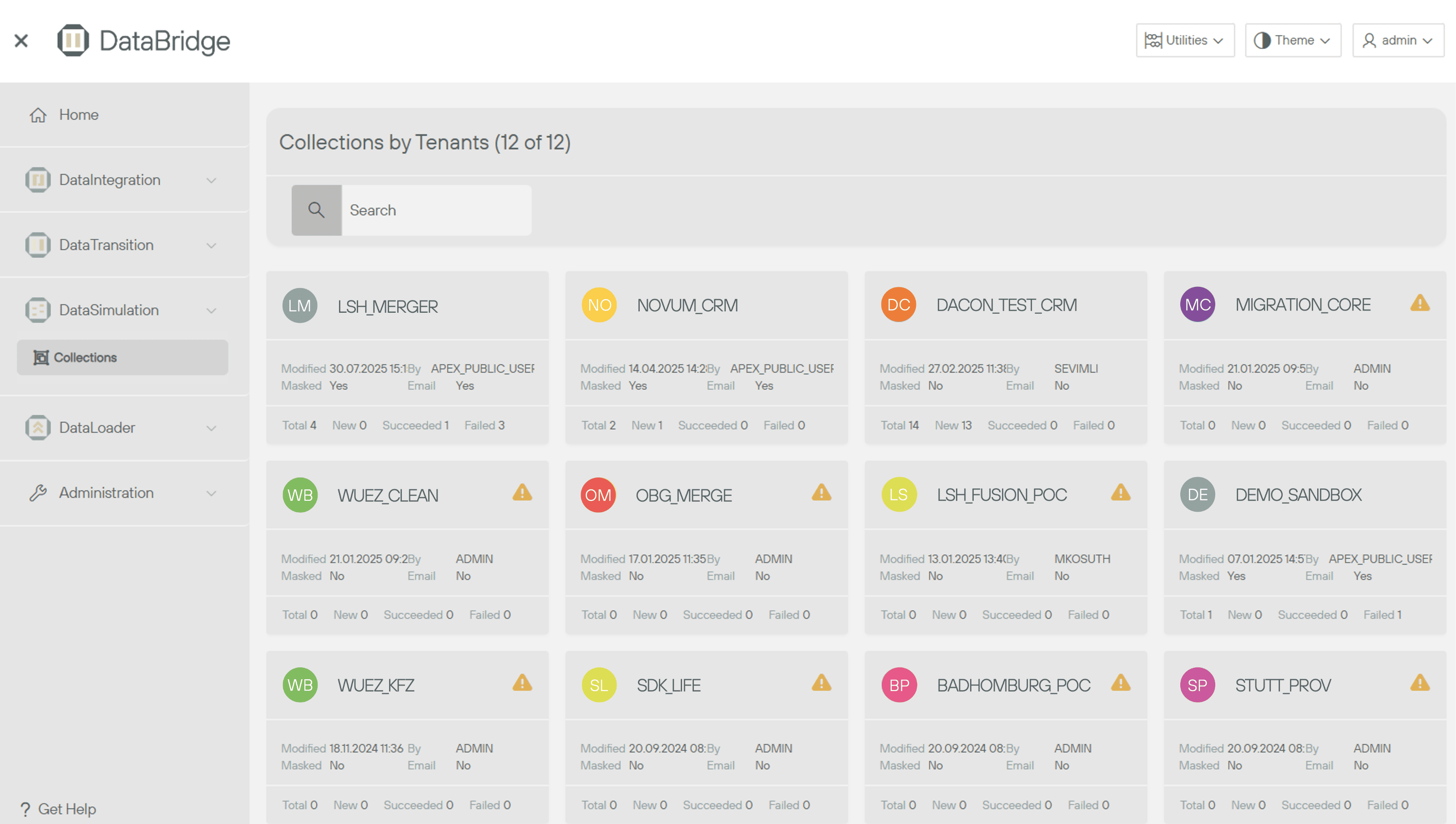

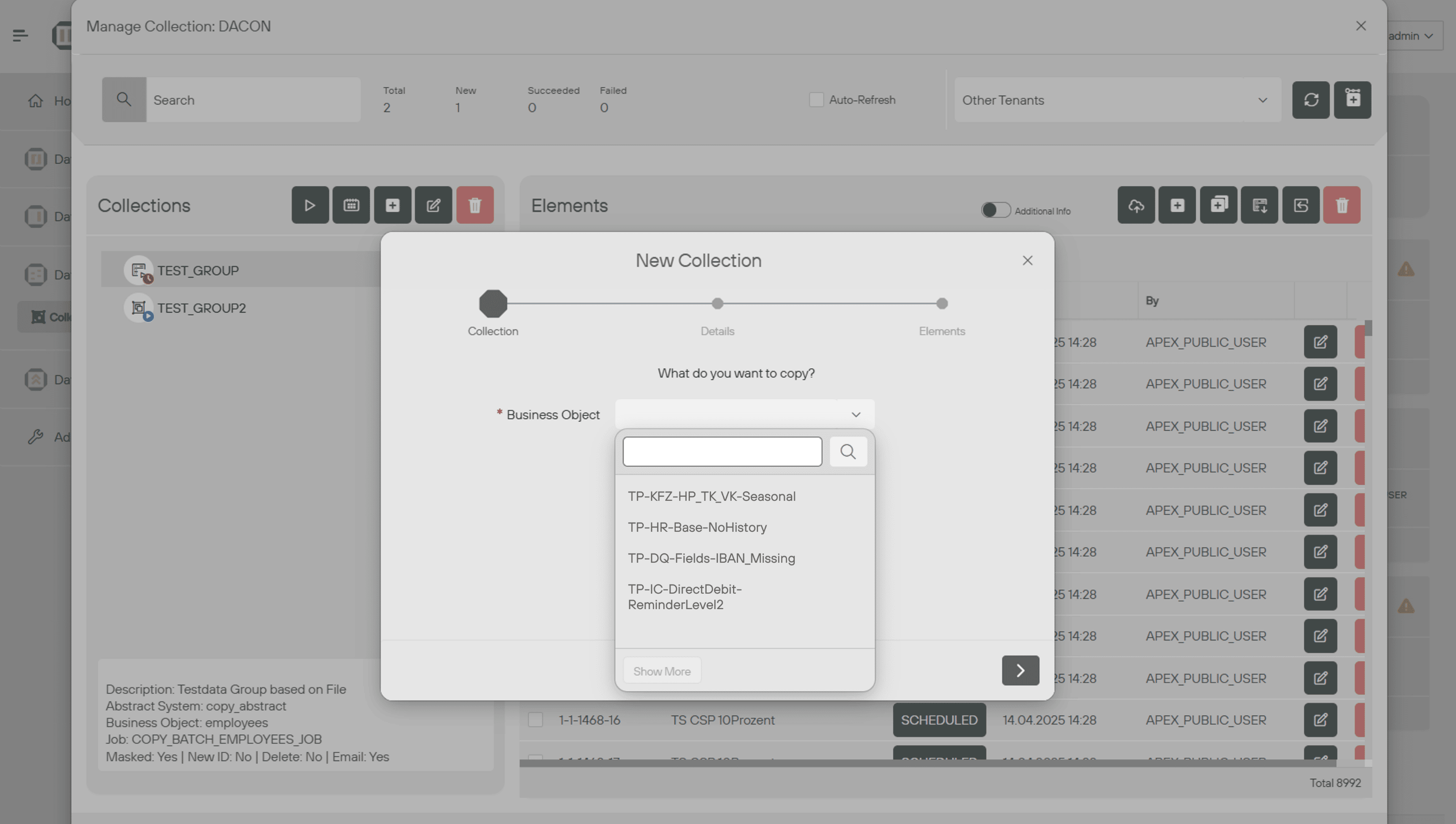

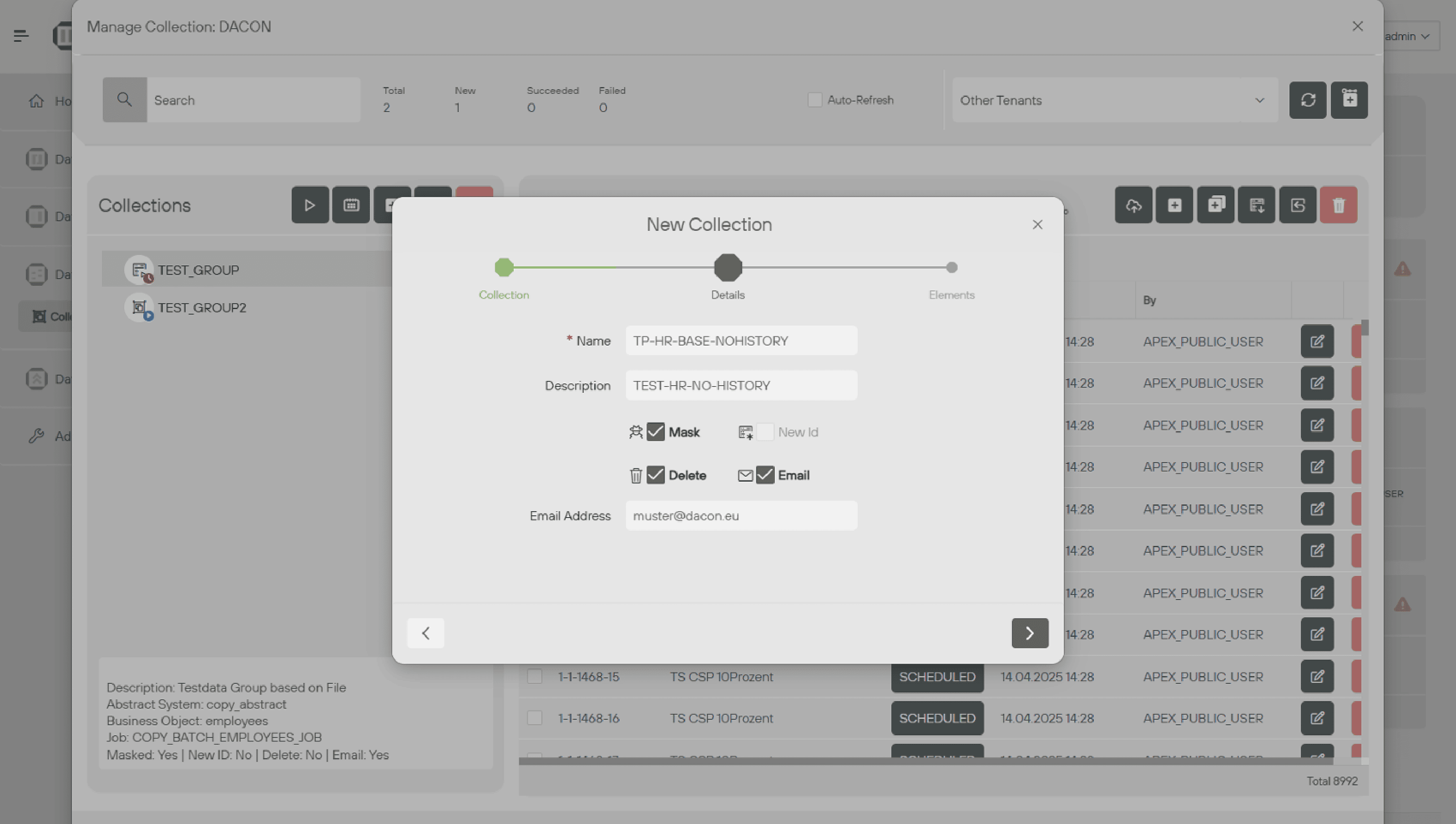

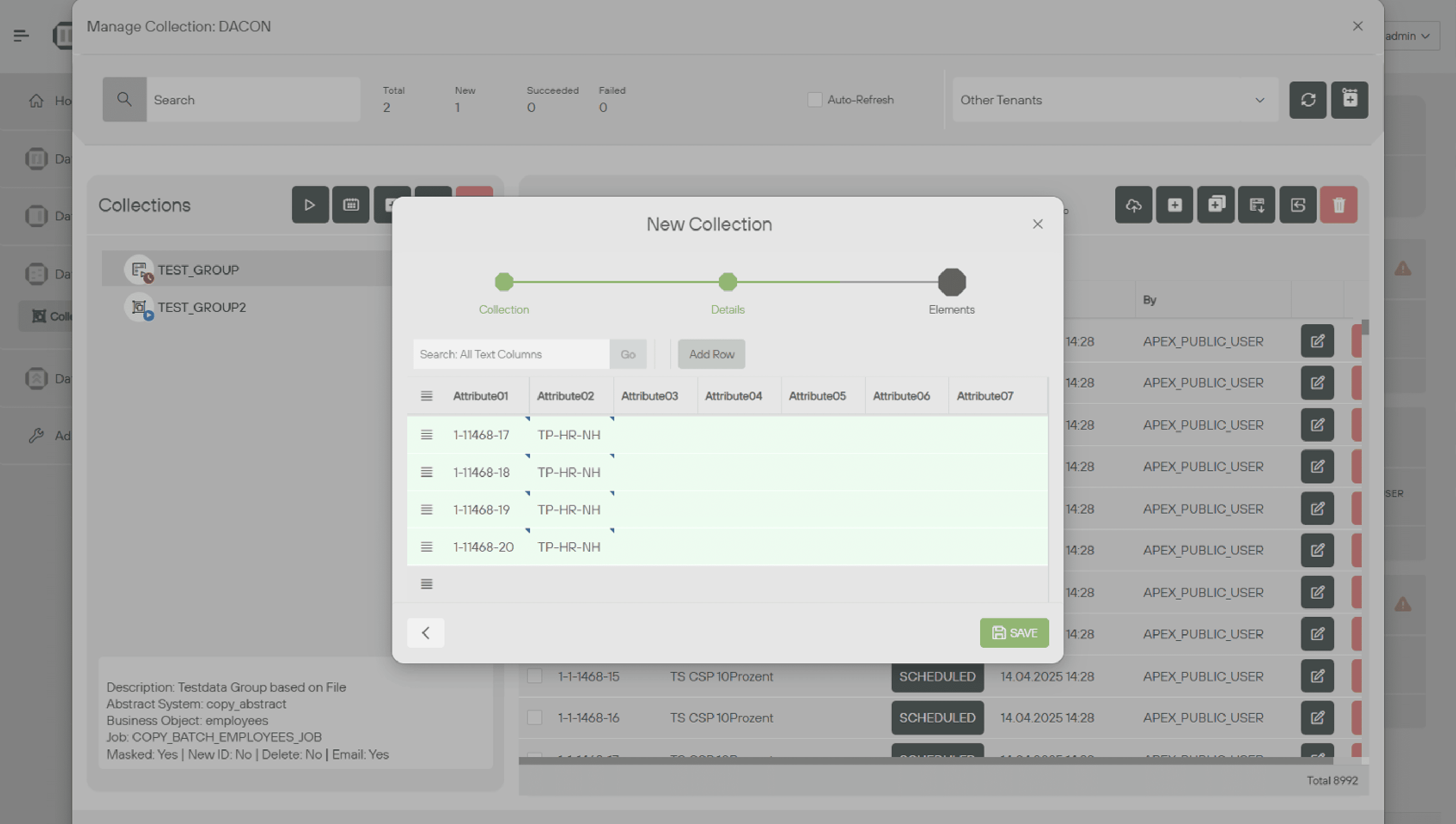

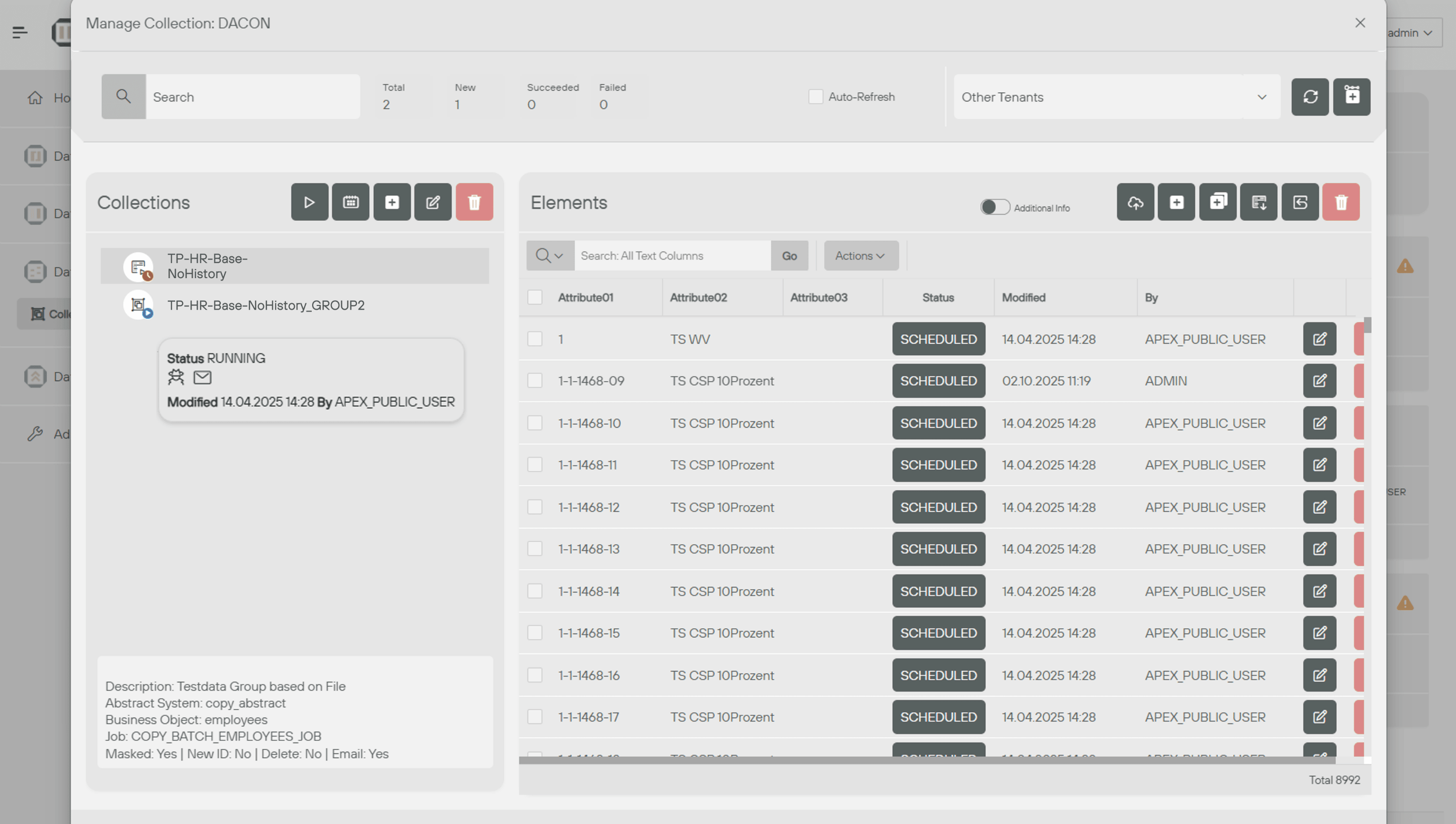

Impressions

Your benefit in a nutshell:

Maximum realism

Test with production-like data that precisely reflects the complex relationships, dependencies and business logic of your real data.

Consistent data protection

Work with the assurance that sensitive customer information is reliably protected by proven, GDPR-compliant procedures before it reaches your test environment.

Noticeable efficiency

Empower your teams to create exactly the datasets they need through an intuitive process – in minutes instead of weeks.

The logic behind the ease:

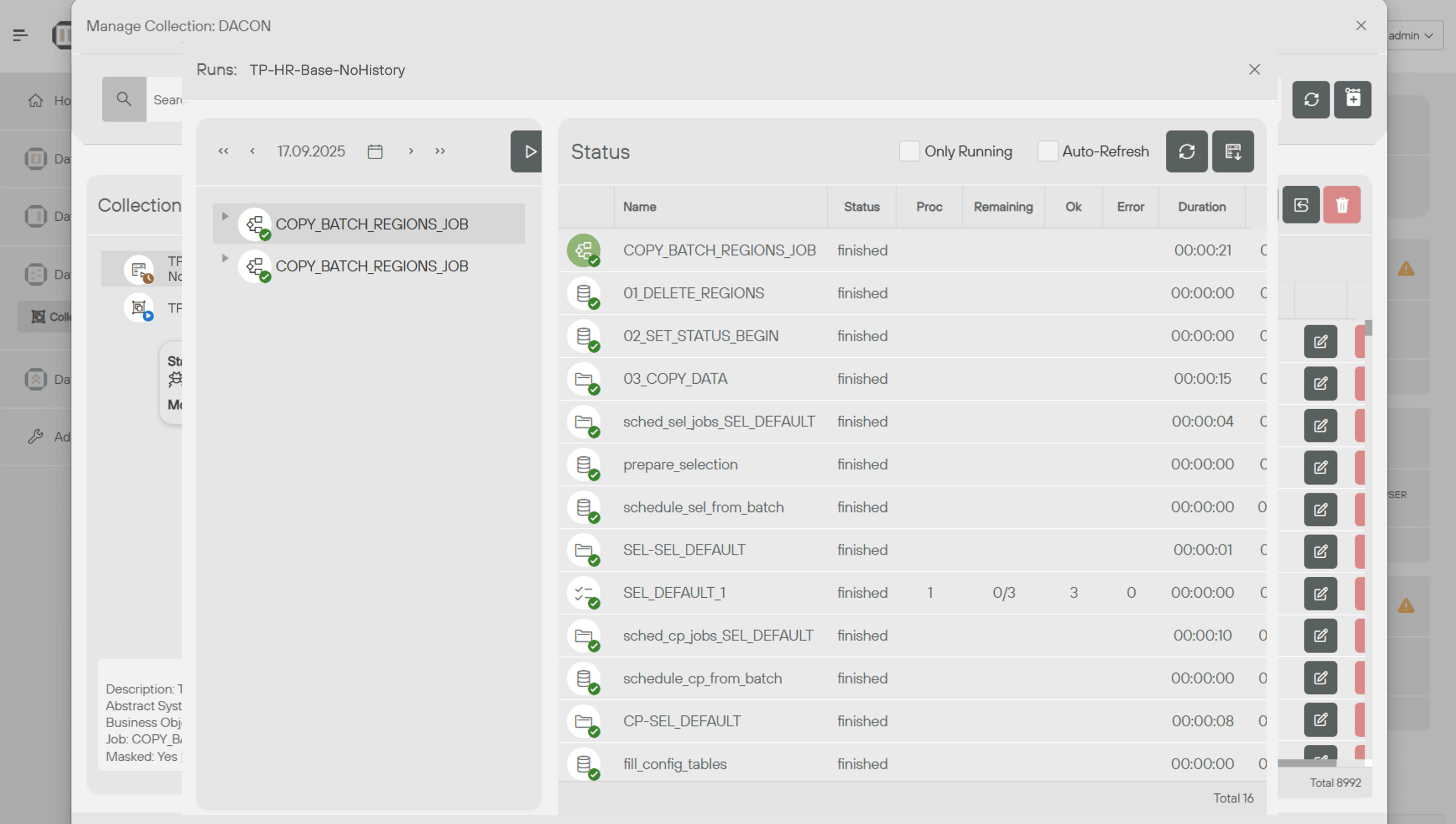

It's that simple

1:1 copy of production-like data

- Creation of test data that replicates production data one-to-one in terms of structure, scope and distribution of values

- Safe reproduction of production defects in the test environment

- Realistic problem re-enactment and resolution

GDPR-compliant anonymization

- Integrated mechanisms for data masking and pseudonymization ensure that test data can no longer be traced back to individuals

- Techniques such as pseudonymization, generalization and encryption are applied so that real customer information is protected

- The highest data protection standards are met automatically every time data is created
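To make the techniques named above more concrete, the following is a minimal, generic sketch of keyed pseudonymization and generalization. It does not show how dacon® DataSimulation is implemented; the function names, the key and the sample record are assumptions chosen purely for illustration.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; a real key would come from a secrets store.
SECRET_KEY = b"example-key-not-for-production"


def pseudonymize(value: str, key: bytes = SECRET_KEY, length: int = 12) -> str:
    """Map a value to a stable token using a keyed hash (HMAC-SHA256).

    The same input always yields the same token, so joins across tables
    still work, while the original value is not stored in the output.
    """
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]


def generalize_age(age: int, band: int = 10) -> str:
    """Replace an exact age with a band such as '30-39'."""
    lower = (age // band) * band
    return f"{lower}-{lower + band - 1}"


# Illustrative record, not real customer data.
record = {"customer_id": "C-1001", "name": "Erika Mustermann", "age": 37}

masked = {
    "customer_id": pseudonymize(record["customer_id"]),
    "name": pseudonymize(record["name"]),
    "age_band": generalize_age(record["age"]),
}
```

Because the token is derived from a keyed hash, the same customer number maps to the same token in every table, which is what keeps masked records joinable.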

Automated deployment

- Test data can be copied and distributed at the push of a button or on a schedule

- Testers and developers get fresh, consistent test data on demand, without manual effort

- Business users can generate the specific test data they need themselves

- Deployment is automated, which minimizes bottlenecks in test data provisioning

Flexible Use Cases & Integration

- The solution is technology- and system-agnostic

- DataSimulation can copy test data between different systems – depending on the project requirement

- Whether mainframe, cloud or database: the integration into existing IT landscapes is seamless

- This flexibility allows use in various scenarios (development, QA, migration) and facilitates integration into CI/CD pipelines or other automation workflows

Workflow

Systematic anonymization:

Realistic data at the touch of a button

Our decisive advantage lies in realistic masking. We do not simply replace sensitive data with meaningless characters; we create a data-protection-compliant parallel world that feels like the original. Our tool preserves the business logic and integrity of your data:

Intelligent pseudonymization

Test with production-like data that precisely reflects the complex relationships, dependencies and business logic of your real data.

Logical consistency

A postal code remains a valid, existing postal code that matches the tariff. A date of birth is changed so that the age stays within a relevant range. The business logic remains untouched.
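The two rules described here can be sketched generically as follows. This is an illustration under stated assumptions, not the product's actual algorithm: the tariff-zone lookup table, the zone names, the function names and the 30-day window are all hypothetical.

```python
import hashlib
from datetime import date, timedelta

# Hypothetical lookup: valid postal codes grouped by an assumed tariff zone.
POSTAL_CODES_BY_ZONE = {
    "zone_a": ["10115", "10117", "10119"],
    "zone_b": ["80331", "80333", "80335"],
}


def _stable_int(seed: str) -> int:
    """Derive a non-negative integer from a string, stable across runs."""
    return int(hashlib.sha256(seed.encode("utf-8")).hexdigest(), 16)


def mask_postal_code(postal_code: str, zone: str) -> str:
    """Swap a postal code for a valid one from the same tariff zone."""
    candidates = POSTAL_CODES_BY_ZONE[zone]
    return candidates[_stable_int(postal_code) % len(candidates)]


def mask_birth_date(birth_date: date, max_shift_days: int = 30) -> date:
    """Shift a date of birth by at most max_shift_days in either direction."""
    span = 2 * max_shift_days + 1
    offset = _stable_int(birth_date.isoformat()) % span - max_shift_days
    return birth_date + timedelta(days=offset)


original_dob = date(1985, 6, 15)
masked_dob = mask_birth_date(original_dob)
masked_zip = mask_postal_code("10115", "zone_a")
```

Note that this simple sketch can map a value onto itself (for example when the chosen postal code happens to be the original one); a production-grade approach would need to handle that case and use a keyed rather than a plain hash.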

Structural fidelity

All relationships between the data (e.g. between customer, contract and claim) remain fully intact, even when creating smaller, highly specific test data sets ("subsets").
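As a generic illustration of what an integrity-preserving subset means, the sketch below follows the customer, contract and claim chain so that no extracted row points to a missing parent. The table layout, field names and sample rows are assumptions for illustration and do not reflect the product's data model.

```python
# Illustrative in-memory tables; field names are hypothetical.
customers = [{"id": 1, "name": "A"}, {"id": 2, "name": "B"}]
contracts = [
    {"id": 10, "customer_id": 1},
    {"id": 11, "customer_id": 2},
    {"id": 12, "customer_id": 1},
]
claims = [
    {"id": 100, "contract_id": 10},
    {"id": 101, "contract_id": 11},
    {"id": 102, "contract_id": 12},
]


def subset_for_customers(customer_ids, customers, contracts, claims):
    """Extract the selected customers plus every dependent contract and claim."""
    wanted = set(customer_ids)
    sub_customers = [c for c in customers if c["id"] in wanted]
    # Keep only contracts owned by the selected customers.
    sub_contracts = [c for c in contracts if c["customer_id"] in wanted]
    contract_ids = {c["id"] for c in sub_contracts}
    # Keep only claims whose contract is part of the subset.
    sub_claims = [c for c in claims if c["contract_id"] in contract_ids]
    return sub_customers, sub_contracts, sub_claims


sub_customers, sub_contracts, sub_claims = subset_for_customers(
    [1], customers, contracts, claims
)
```

Selecting from the parent downwards guarantees that every foreign key in the subset resolves to a row that is also in the subset.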

Do you have questions about the DataSimulation module, or would you like to know more about dacon® DataBridge? Feel free to contact us for further information or personal advice.

Make an appointment now

Further information on test data management can be found in our service portfolio:

Testdata-Management